New in iOS 13 Accessibility – Voice Control and More

New APIs, Voice Control and other Accessibility Changes in iOS 13

When it comes to Mobile Accessibility, iOS is the Gold Standard, and iOS 13 puts even more distance between it and its competitors. If you’re familiar with iOS Accessibility, these changes will feel like welcome additions. If you’re not familiar with iOS Accessibility, you should play around with the iOS Accessibility Settings menu for a bit before reading!

Many of the changes Apple has made affect and interact with existing technologies in meaningful ways, including:

- Allowing a system-wide configuration for auto-playing media.

- Voice Control: A brand new Assistive Technology.

- New APIs that make Custom Accessibility Actions easier for developers.

Voice Control

Imagine you come back from deployment overseas. You lost the use of your hands in a combat operation. You start to realize how dependent you were on this ability. You find it difficult to order your family a pizza, text your children, maintain a blog, and collaborate effectively with peers. Wouldn’t it be great if you could easily control your phone with your voice?

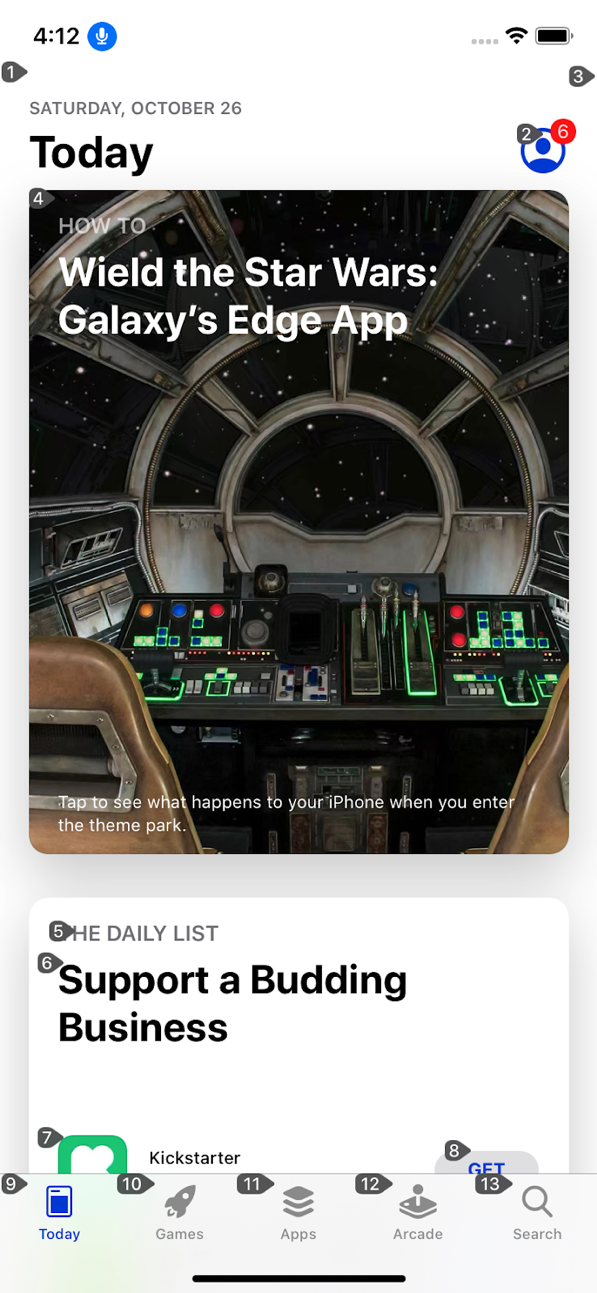

Voice Control is a technology aimed at helping iOS users with physical disabilities accomplish this. When Voice Control is on, an overlay appears with a number for each active element. You can then use these numbers to reference and act on individual controls. Voice Control lacks an effective “first time user” introduction, so discovering the proper voice command format was a little difficult, at least by Apple product usability standards.

The Voice Control commands I have successfully tested are:

- Show Names: Changes the overlay to display Names.

- Show Numbers: Changes the overlay to display Numbers.

- Tap 1: Clicks the first control on the screen.

- Tap “Apps”: Clicks the control labeled Apps.

- Increment {Number/Name}: Increments an adjustable control.

- Decrement {Number/Name}: Decrements an adjustable control.

- Go Home

- Go Back

- Show me what to say: Love this one!!!

I still want to try custom accessibility actions from Voice Control and explore deeply interactive controls (think: carousels or calendar widgets). However, my experience so far with Voice Control has been great. Steep learning curve aside, Voice Control is an awesome addition to the iOS Accessibility family.

Watch me use Voice Control here for some quick and easy accessibility testing:

iOS Accessibility Testing: Voice Control to the Rescue!

One of the things I love about iOS and Voice Control is the potential it has to simplify the iOS Accessibility Testing Process. Below are a few problems I have with the way the testing process is now:

- As a developer, toggling VoiceOver On and Off wastes precious seconds to check for trivial things. Ex: Was that label added properly?

- As an Accessibility Tester, the process becomes repetitive, and any time I can save for every screen I test is precious.

- In particular, ensuring that every actionable control can be activated by assistive technologies is painfully slow!

Voice Control can help significantly in both of these scenarios! Let’s talk about a couple of specific examples.

Speed Up Focus Order Testing (WCAG 2.4.3)

One of the aspects of Accessibility Testing that consumes a lot of time is Focus Order testing. Painstakingly ensuring that every item on a screen is focusable by VoiceOver and Switch Control is difficult and takes a lot of time, even for experienced testers. If only we had something better! Wait for it…

While playing around with Voice Control the following has become obvious:

- Everything you can activate in Switch Control you can activate in Voice Control.

- Everything you can activate in Voice Control you can activate in Switch Control.

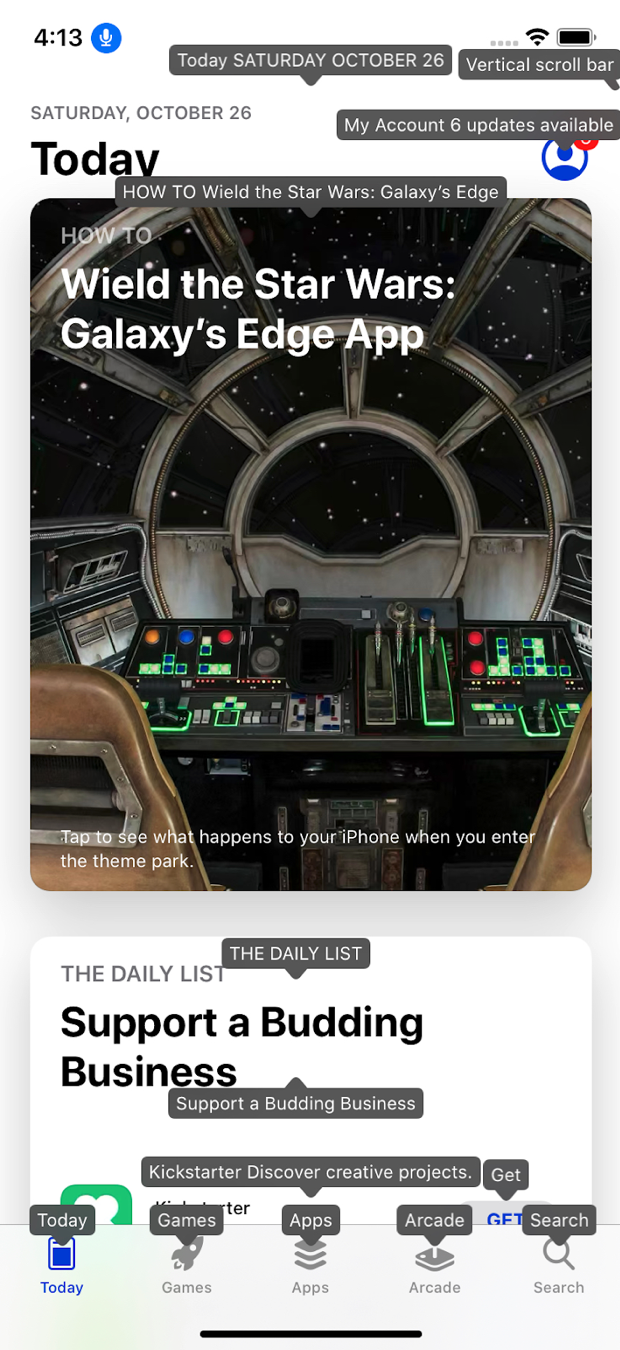

We can take advantage of this information in Accessibility Testing! Imagine taking a glance at one image and knowing that every VoiceOver, Switch Control, and Voice Control user can activate every control in your application. Well here you go:

From this image alone we can conclude that every control is:

- Actionable in VoiceOver

- Actionable in Switch Control

- Actionable in Voice Control

- AND there are no blatant focus order issues

Voice Control testing is faster than Switch Control testing. However, Switch Control still has its own set of considerations, particularly involving control groupings and usability.

Know your content and your users, and test with whichever technology makes sense for you. Once you have tested with one, the other becomes a significantly lower priority.

iOS Accessibility Testing for Developers

I get this question all the time:

“What testing should be done as part of the development process and what should be left for Accessibility Experts?”

My answer has always been that developers should:

- Make sure every active control has a descriptive name and can be activated.

- Perform automated Accessibility Tests.
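As a rough illustration of what a very small automated check for the first goal could look like, here is a sketch that walks a view hierarchy and collects controls without an accessibility label. The function name `controlsMissingLabels(in:)` is hypothetical and not part of UIKit. UIKit can also supply default labels for some standard controls, and whether those show up through `accessibilityLabel` can depend on the runtime, so treat every hit as a candidate for review rather than a confirmed defect.

```swift
import UIKit

/// Hypothetical helper (not a UIKit API): returns every enabled UIControl
/// in the visible part of the hierarchy under `root` whose
/// accessibilityLabel is missing or blank.
func controlsMissingLabels(in root: UIView) -> [UIControl] {
    // A hidden view hides its whole subtree, so there is nothing to audit.
    guard !root.isHidden else { return [] }

    var offenders: [UIControl] = []

    if let control = root as? UIControl, control.isEnabled {
        let label = control.accessibilityLabel?
            .trimmingCharacters(in: .whitespacesAndNewlines) ?? ""
        if label.isEmpty {
            offenders.append(control)
        }
    }

    // Recurse so nested controls are checked as well.
    for subview in root.subviews {
        offenders.append(contentsOf: controlsMissingLabels(in: subview))
    }
    return offenders
}
```

A unit test could call this on a view controller’s root view and fail when the result is not empty. It complements, and does not replace, the manual checks and the dedicated automated tools mentioned above.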

Asking developers to do full Accessibility Assessments is not reasonable. I work with many brilliant developers here at Deque who have an awesome passion for Accessibility. We live and breathe this stuff here. Maybe a handful of them could do an Accessibility Assessment that wouldn’t get laughed at by an Accessibility Expert. Even understanding what types of images need descriptions vs. what’s decorative is a decision that should be reviewed by an expert.

However, there is no reason an Application should get released with controls that cannot be activated by Assistive Technologies. That said, minimizing a developer’s time testing their application and maximizing their time writing code is important. This leads us to Voice Control… check out this beautiful screenshot below!

Remember what my first goal for developers was?

- Make sure every active control has a descriptive name and can be activated.

Voice Control makes checking for this trivial! The best part: Voice Control can be left on and not affect the standard use of the device. So a developer doesn’t have to Toggle it On and Off. This makes for a very quick test and an Accessibility Testing cycle that no developer can object to!
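When the overlay shows a control with a missing or unhelpful name, the fix usually lives in code. The sketch below assumes a hypothetical share button; the function name and the strings are illustrative only. It sets `accessibilityLabel`, and on iOS 13 it also sets `accessibilityUserInputLabels`, which Apple documents as a way to offer shorter names for spoken or typed input when the full label is too long to say comfortably.

```swift
import UIKit

/// Hypothetical factory for an icon-style share button.
/// The function name and strings are illustrative, not from the article.
func makeShareButton() -> UIButton {
    let button = UIButton(type: .system)

    // The descriptive name that assistive technologies fall back on.
    button.accessibilityLabel = "Share this article"

    // iOS 13+: shorter names a user can speak; the primary one goes first.
    if #available(iOS 13.0, *) {
        button.accessibilityUserInputLabels = ["Share", "Send"]
    }
    return button
}
```

With Voice Control left on, a quick look at the name overlay should then confirm whether the control picked up the name you intended.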

Conclusion: Every sighted developer should do basic Voice Control testing. There’s no reason not to. It trivially prevents the most severe set of blocking Accessibility issues you can find in iOS Applications.

Accessibility Setting: Disable Video Autoplay

iOS 13 introduces a new system-wide Accessibility Setting: the ability to disable autoplay videos. Also, for a blind person, there may not be much of a difference between a video and, say, a podcast. So, let’s expand this to any auto-playing media.

The ability to disable autoplay media System-Wide is a powerful thing for users with disabilities, and this subtle feature should not be dismissed. However, it will also take time for the development community to adopt this setting. This feature has been supported individually in many applications for some time, especially applications whose primary purpose is to display media, such as YouTube or Facebook.

Unifying these settings in a common place that is also easy for other applications to respect is very valuable, especially since most other autoplay media is significantly less valuable than that of Facebook or YouTube (*cough* advertisements).

WCAG 1.4.2 Conformance Implications

This API directly relates to WCAG 1.4.2 – Audio Control. Quoting from the WCAG understanding document, “Individuals who use screen reading software can find it hard to hear the speech output if there is other audio playing at the same time.”

This setting gives users the ability to avoid this scenario. In WCAG terms, respecting this setting is a Sufficient Technique for adhering to this Success Criterion.

In this developer’s opinion, NOT respecting this setting is a violation of this Success Criterion, though I’m sure WCAG purists would shoot me down on that one, as “alternative mechanisms” would remain conformant. At the very least, respecting the isVideoAutoplayEnabled setting is an iOS Best Practice, and application developers should check it before auto-playing any media for more than a few seconds.

Plus, it’s easier than maintaining your own mechanism for doing this. I mean, check out how easy this is:

UIAccessibility.isVideoAutoplayEnabled iOS API Documentation

```swift
import UIKit

if UIAccessibility.isVideoAutoplayEnabled {
    // The system setting allows autoplay: start the annoying media.
} else {
    // The user turned autoplay off: don’t autoplay annoying media.
}
```
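A user can change this setting while your app is running, so a one-time check may go stale. As a minimal sketch, the class below also listens for `UIAccessibility.videoAutoplayStatusDidChangeNotification`. The class name `AutoplayPolicy` and its `onChange` callback are hypothetical names chosen for this example, not part of UIKit.

```swift
import UIKit

/// Hypothetical wrapper around the system autoplay setting.
/// `AutoplayPolicy` and `onChange` are illustrative names.
@available(iOS 13.0, *)
final class AutoplayPolicy {
    private var observer: NSObjectProtocol?

    /// Called with the new value whenever the system setting changes.
    var onChange: ((Bool) -> Void)?

    /// True when the system setting currently allows autoplay.
    var allowsAutoplay: Bool {
        return UIAccessibility.isVideoAutoplayEnabled
    }

    init() {
        observer = NotificationCenter.default.addObserver(
            forName: UIAccessibility.videoAutoplayStatusDidChangeNotification,
            object: nil,
            queue: .main
        ) { [weak self] _ in
            guard let self = self else { return }
            self.onChange?(self.allowsAutoplay)
        }
    }

    deinit {
        // Block-based observers must be removed explicitly.
        if let observer = observer {
            NotificationCenter.default.removeObserver(observer)
        }
    }
}
```

A player screen could keep one instance, consult `allowsAutoplay` before starting playback, and use `onChange` to pause media that is already playing on its own.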

New Developer API: UIAccessibilityCustomActionHandler

UIAccessibilityCustomAction.Handler iOS API Documentation

UIAccessibilityCustomActions are a powerful tool for those developing accessible iOS applications. They allow you to associate multiple actions with one focusable target. This is great for allowing VoiceOver or Switch Control users to perform actions that would normally be performed by gestures, such as flicking the Contact Card of your arch-nemesis to the right to delete them. Muhahaha!!!

The new Handler API makes creating these actions easier. What before required an Associated Target and Selector is now a simple Closure. Just associate your custom Action with a Name and a Handler, and this Handler will be performed when VoiceOver users activate the action.

In technical terms we exchange this:

init(name: String, target: Any?, selector: Selector)

For this:

init(name: String, actionHandler: Handler)

While this is not a huge improvement, it does make this process easier. Also, it solidifies Apple’s commitment to an awesome Accessibility design pattern with a functional development approach that feels more modern.
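To make the difference concrete, here is a sketch of both styles side by side on a hypothetical contact cell. The `ContactCell` class, its `onDelete` callback, and the action name are assumptions made up for this example. The handler closure receives the action and returns a `Bool` indicating whether it succeeded, just like the selector-based method.

```swift
import UIKit

/// Hypothetical cell for the contact-card example above.
final class ContactCell: UITableViewCell {
    /// Illustrative callback that removes the contact from the data source.
    var onDelete: (() -> Void)?

    func configureAccessibilityActions() {
        if #available(iOS 13.0, *) {
            // iOS 13 style: the behavior lives in a closure.
            let delete = UIAccessibilityCustomAction(
                name: "Delete contact",
                actionHandler: { [weak self] _ in
                    guard let onDelete = self?.onDelete else { return false }
                    onDelete()
                    return true // Report that the action succeeded.
                }
            )
            accessibilityCustomActions = [delete]
        } else {
            // Earlier style: a target plus an @objc selector.
            let delete = UIAccessibilityCustomAction(
                name: "Delete contact",
                target: self,
                selector: #selector(handleDelete(_:))
            )
            accessibilityCustomActions = [delete]
        }
    }

    @objc private func handleDelete(_ action: UIAccessibilityCustomAction) -> Bool {
        guard let onDelete = onDelete else { return false }
        onDelete()
        return true
    }
}
```

The closure version keeps the action’s name and behavior in one place and avoids the extra `@objc` method, which is most of what the new initializer buys you.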

Summary

There are no groundbreaking Accessibility changes in iOS 13. In particular, there are no significant VoiceOver updates. However, there are some welcome tools and solid iterative improvements that show Apple’s commitment to users with disabilities. Also, Voice Control is a new, awesome tool and should become a part of every Application Development Team’s testing process.

Great intro, Chris. This is SO timely, when I was trying to grapple with the new iOS 13 a11y enhancements. Thank you so much!

Thanks Chris for this very interesting article.

iOS 13 is full of new features such as the Voice Control I found great but the problem I noticed deals with the accessibility custom actions you mentioned with the ‘UIAccessibilityCustomActionHandler’.

Custom actions available on an accessibility element no longer seem to be announced, or are announced only intermittently, depending on conditions that are anything but intuitive.

Some developers have already traced this problem like https://forums.raywenderlich.com/t/ios-accessibility-tutorial-making-custom-controls-accessible-raywenderlich-com/87100/2 or https://stackoverflow.com/questions/59307946/uiaccessibilityelement-accessibilitycustomactions-not-working-properly/59343457#59343457.

Do you know if there’s a kind of workaround because it’s really harmful for the iOS 13 VoiceOver users, please?… while everything seems to be still OK on iOS 12.

thank you for this one.

As a technologist and writer, it is difficult for me to admit this bias: I am all in on Apple’s platforms. At this point, I don’t think it is even possible for me to consider an Android product, let alone recommend one. It is clear to me that the two companies have very different priorities. Google’s priorities are not aligned with my needs. And Apple’s are. For me, it is as simple as that.

Every time I get curious enough to glance over the fence to see just how green the grass is, Apple does something to make its own grass greener than ever. In this case, Apple has shown that accessibility is still a priority — perhaps more so than ever.