How axe Auditor alleviates 3 common manual testing pain points

Automated accessibility testing is a great first step in making your website or app accessible. It’s very effective at catching the ‘low hanging fruit’ problems without requiring you to be an accessibility expert. Additionally, it’s really easy to introduce automated testing tools like axe DevTools into your software development process.

However, automated testing will not provide full accessibility coverage. Full accessibility coverage ensures that all users can access all the information your site provides without difficulty. To get complete coverage, you’ll need to also perform Manual Accessibility Testing (testing that needs a human to complete using assistive technologies on multiple browsers and interfaces). To both experts and non-experts, however, manual testing comes with a few challenges:

- WCAG has a lot of Success Criteria: Manual testing requires knowledge of WCAG Success Criteria, which can range from 25 to over 50 criteria depending on conformance level

- Screen Readers take time and expertise to learn: Manual Testing requires testing with Screen Readers, and Screen Readers require expertise

- Various interpretations of WCAG (gray areas): Some WCAG success criteria require context and interpretation to determine the issue and path to resolution

These are some reasons why manual accessibility testing is often seen as ‘painful’ or ‘complicated,’ and why lots of developers and QA testers hope to avoid it. But, with the help of the right tools, these common pain points can be alleviated. Below we will describe why these three challenges can make manual accessibility testing difficult, and how a testing tool like axe Auditor can make it much easier.

Pain point 1: WCAG has a lot of Success Criteria

The most widely-accepted set of accessibility standards is the Web Content Accessibility Guidelines (WCAG), created by the W3C. WCAG criteria are classified into various versions and levels. Versions include WCAG 1.0, 2.0, and most recently 2.1. Version 3.0 is actively under development at the time of publishing this post. Success criteria under each of these versions are further broken down into conformance levels A, AA, and AAA.

The most commonly-used set of guidelines today is WCAG 2.0 Level AA. However, WCAG 2.1 Level AA is becoming increasingly popular and is Deque’s default standard for client audits because of its additional clarity and coverage. The following table helps to quantify the breadth of knowledge of WCAG success criteria required to perform accessibility testing.

| WCAG Conformance Level | Number of Success Criteria included |
| --- | --- |
| WCAG 2.0 Level A | 25 |
| WCAG 2.0 Level AA | 38 (includes Level A) |
| WCAG 2.1 Level A | 30 |
| WCAG 2.1 Level AA | 50 (includes Level A) |

Note that this table excludes Level AAA, which adds even more success criteria. So, depending on which WCAG conformance level you choose, you could reasonably expect to check somewhere between 25 and 50 Success Criteria when assessing the accessibility of your site or app. As you can see, a significant amount of familiarity and expertise with WCAG success criteria is required to perform accurate, full-coverage accessibility testing.

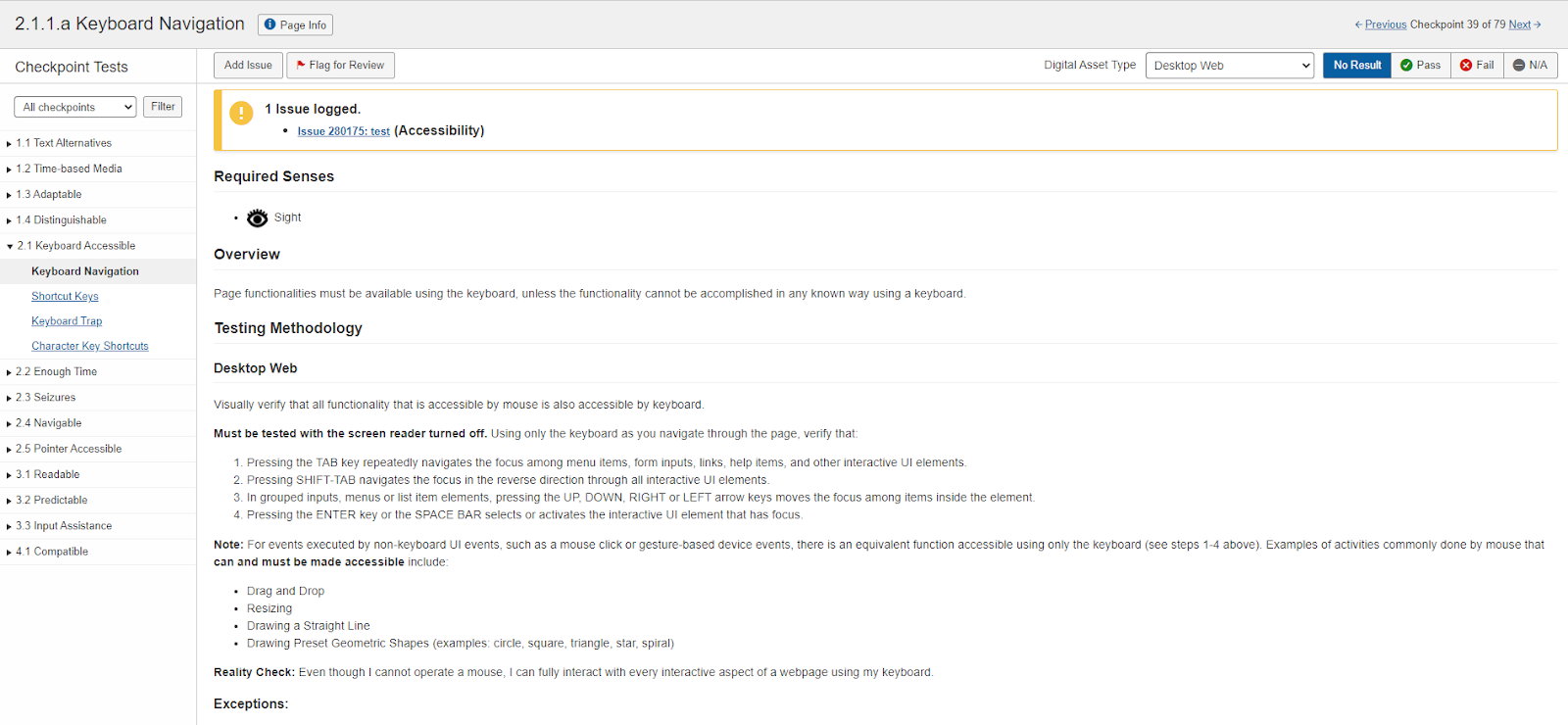

Employing a tool like axe Auditor can take the pressure off your accessibility team to memorize each success criterion, because axe Auditor guides the user through how to test each criterion that must be tested manually.

Axe Auditor not only describes each violation and how it impacts people with disabilities, but also provides step-by-step instructions on how to test for a specific issue, along with details describing any criteria that may require extra knowledge or context to test.

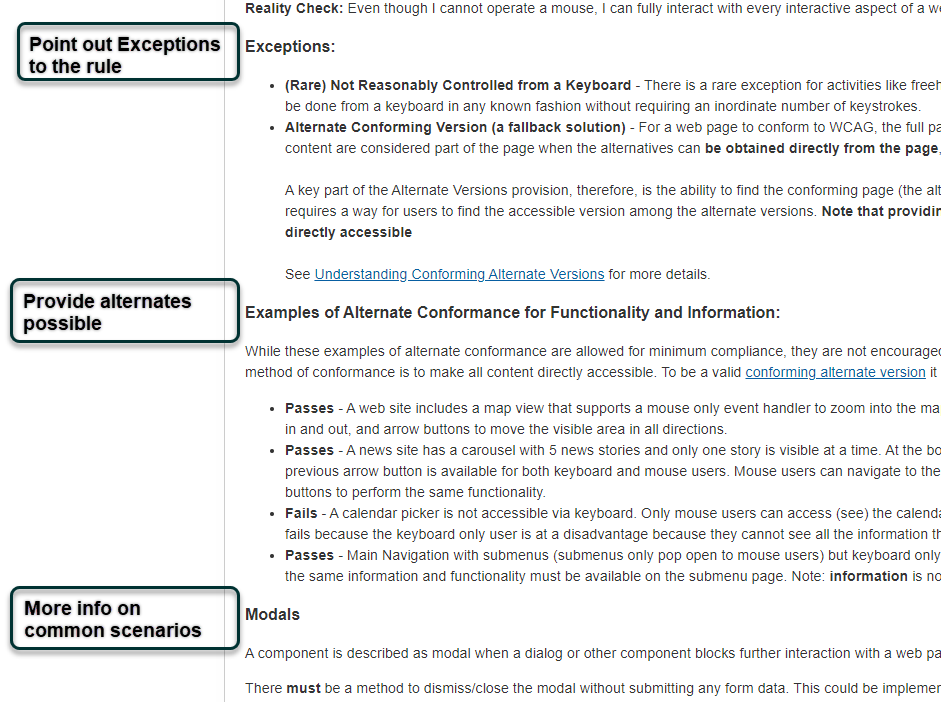

Additionally, axe Auditor guides you on how to identify the trickier items that could be marked as accessibility violations but are actually best practices for creating better user experiences. This can help your teams prioritize what issues are most important to fix. See below for an example of how axe Auditor guides you through a Keyboard Navigation checkpoint.

As you can see, axe Auditor provides an example scenario of a user who interacts with a web page solely with their keyboard. It then gives step-by-step instructions on how to address rare exceptions to the keyboard navigation checkpoint, examples of conformance alternatives, and more information on common scenarios where this keyboard checkpoint applies. This makes it easy for the tester to understand the greater impact of meeting the success criteria and then test to ensure the criteria are met on their website.

Pain point 2: Manual testing requires Screen Reader expertise

On top of understanding numerous Success Criteria, accessibility testers also need to understand how to use a Screen Reader. There are numerous Screen Readers available; the three most popular (in no specific order) are JAWS, NVDA, and VoiceOver. So, an accessibility tester may need to know up to 50 success criteria, test them with three different Screen Readers, and repeat that testing across multiple environments (e.g. desktop, responsive web, mobile, etc.). Learning to test the success criteria with multiple Screen Readers in multiple environments takes a significant amount of training and expertise.
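To get a rough sense of the scale described above, here is a minimal Python sketch that enumerates every combination of criterion, Screen Reader, and environment. The criterion IDs are placeholders invented for illustration, and the count is an upper bound under the assumption that every criterion is checked with every Screen Reader in every environment, which is rarely the case in practice.

```python
from itertools import product

# Figures taken from the text: 50 criteria (WCAG 2.1 Level AA),
# three popular Screen Readers, three example environments.
CRITERIA_COUNT = 50
SCREEN_READERS = ["JAWS", "NVDA", "VoiceOver"]
ENVIRONMENTS = ["desktop", "responsive web", "mobile"]

# Placeholder IDs (hypothetical); real WCAG criteria are numbered like 1.4.3.
criteria = [f"criterion-{n:02d}" for n in range(1, CRITERIA_COUNT + 1)]

# Every (criterion, Screen Reader, environment) combination a tester
# could in principle be asked to cover.
test_matrix = list(product(criteria, SCREEN_READERS, ENVIRONMENTS))

print(f"Worst-case combinations to cover: {len(test_matrix)}")
```

Even if only a fraction of these combinations apply to a given page, the matrix shows why testers benefit from guided, per-environment steps rather than memorization.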

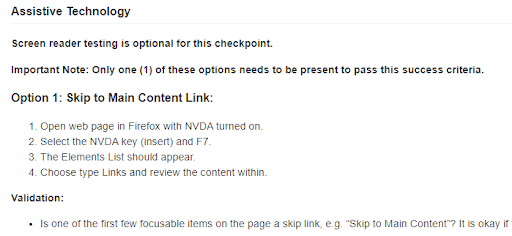

A tool like axe Auditor provides a step-by-step testing methodology that is customized by the chosen ruleset (WCAG, ACAA, Section 508, Trusted Tester v5, etc.), page content, and testing environment (desktop, responsive, mobile, etc.), thereby reducing the amount of memorization and prior experience a tester needs in order to test effectively.

Because axe Auditor provides clear Screen Reader testing steps for each success criterion, it helps improve your testing efficiency and creates a standardized testing process for your whole testing team to follow, whether or not they are Screen Reader experts. The result is a significant boost in your team’s productivity.

Pain point 3: Interpretation of WCAG Success Criteria requires expertise (and consistency)

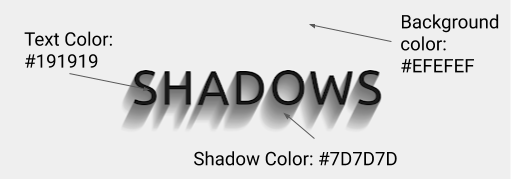

Some WCAG success criteria are very contextual, so violations can’t always be picked up with automated tools. Even Success Criteria that seem very simple to test for, like Color Contrast requirements, can sometimes be tricky to analyze. Consider the image below for an example.

In this image, which two colors should be compared to verify whether the minimum color contrast requirements are satisfied or not? (For the purposes of this illustration, please ignore the fact that this is an image of text).

There are at least two combinations that could be considered:

- The light grey Background color (above each letter, hex code #EFEFEF), with black text color (hex code #191919)

- The dark grey Shadow color (below each letter, hex code #7D7D7D), with black text color (hex code #191919)
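To see why the choice of pair matters, the sketch below computes the contrast ratio for both combinations using the relative luminance and contrast ratio formulas defined in WCAG 2.x. It is an illustration only, not how axe Auditor evaluates contrast; the function names are assumptions, and 4.5:1 is the Level AA minimum for normal-size text.

```python
def hex_to_rgb(hex_code):
    """Convert '#RRGGBB' into a tuple of three 0-255 integers."""
    value = hex_code.lstrip("#")
    return tuple(int(value[i:i + 2], 16) for i in (0, 2, 4))


def relative_luminance(hex_code):
    """Relative luminance as defined in WCAG 2.x (0 = black, 1 = white)."""
    def linearize(channel):
        c = channel / 255
        return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4

    r, g, b = (linearize(v) for v in hex_to_rgb(hex_code))
    return 0.2126 * r + 0.7152 * g + 0.0722 * b


def contrast_ratio(color_a, color_b):
    """WCAG contrast ratio: (lighter + 0.05) / (darker + 0.05)."""
    lum_a, lum_b = relative_luminance(color_a), relative_luminance(color_b)
    lighter, darker = max(lum_a, lum_b), min(lum_a, lum_b)
    return (lighter + 0.05) / (darker + 0.05)


AA_NORMAL_TEXT = 4.5  # WCAG Level AA minimum for normal-size text

pairs = {
    "background vs. text": ("#EFEFEF", "#191919"),
    "shadow vs. text": ("#7D7D7D", "#191919"),
}
for label, (first, second) in pairs.items():
    ratio = contrast_ratio(first, second)
    verdict = "passes" if ratio >= AA_NORMAL_TEXT else "fails"
    print(f"{label}: {ratio:.2f}:1 ({verdict} AA for normal text)")
```

With these hex codes, the background pair clears the 4.5:1 threshold comfortably while the shadow pair falls just short of it, so the two interpretations lead to opposite pass/fail results.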

In scenarios like this, different accessibility experts can (and will) have different opinions. With modern web technologies, designers have an immense ability to create new designs that have the potential to challenge accessibility experts in ever-changing ways.

For a developer, there is nothing more frustrating than receiving different remediation requirements for the same issue from different experts. Imagine that, in the scenario above, the first accessibility expert tells you to make the light grey background and black text meet the color contrast requirement. However, by the time the ticket is turned around and is ready for testing, another accessibility expert decides that the Shadow color (below the text) is what must meet the color contrast ratio with the text color. This could introduce a significant delay in the release of the product, as the ticket may have to go back to the designer for remediation, and it could impact other color choices within the product.

The solution to this is to have clearly documented and detailed requirements to help reduce the number of issues that can be interpreted in different ways. Axe Auditor offers a complete testing methodology that is constantly evolving as new design techniques and patterns come out. If the entire team follows a properly-documented testing process through the tool, you can rest assured that the results coming back from the accessibility test team will be consistent, and also contain the necessary details required to address the issue. Moreover, your team will save time communicating the issues back and forth from the developer to the tester because the issues (and their solutions) don’t have to be custom made each time they’re found.

Summary: axe Auditor makes manual testing easier

Comprehensive accessibility testing expertise is a very specialized skill that takes years to develop and continuous training to keep up to date. With constantly changing technology, the scope of coverage required to achieve digital equality gets broader by the day. While automated testing tools have significantly improved their coverage for WCAG, there is still a significant need for manual testing to ensure that all the content is available to all to understand and use.

However, because of the breadth of coverage required, the experience needed to use a Screen Reader, and the variable interpretation of certain web content, it can be daunting to perform accessibility testing without introducing significant additional steps in the development process.

The good news is that manual accessibility testing doesn’t have to be an exercise in laborious content memorization. With the help of tools like axe Auditor, the majority of challenges associated with manual testing can be easily overcome. Axe Auditor can help alleviate the difficulty of working with dozens of success criteria by providing a straightforward path to resolution. It can also provide consistency in results generated by multiple accessibility test team members, streamlining your ticket-creation and improving communication between testers and developers. Finally, axe Auditor can provide the additional benefit of continuously training your accessibility team through repeat usage, thereby making them accessibility testing experts in the process.

If you’d like to learn more about how axe Auditor can help with manual accessibility testing, contact us for a demo.