The Cost of Accessibility False Positives

Pause: Before you read this blog post, it is important to understand what an accessibility false positive is. It will also help to experiment with Deque’s False Positive Calculator to see the impact of false positives. If you want a quick definition, though, a false positive is defined as:

Ac·ces·si·bil·i·ty False Pos·i·tive (noun): an accessibility issue reported by a faulty automated accessibility tool that is either mistaken or a duplicate of another issue.

“The false positives resulting from Karen’s automated accessibility testing tool can be attributed to her inexperience in interpreting WCAG violations. Karen should probably stick to creating hilarious Bird Box memes instead.”

Proceed: Now that you have a solid understanding of accessibility false positives and have tried out the False Positive Calculator, let’s discuss the backstory behind the calculator and why it was made. I’ll also share my experience as an owner and leader of digital accessibility, and explain why choosing the right automated tool was critical to creating a sustainable, scalable digital accessibility practice.

The Need for Automation in Enterprise Accessibility

As the owner and leader of an extensive, complex digital accessibility program at a Fortune 50 company, I was constantly challenged to do more with less. This is not an uncommon problem, but given how quickly digital accessibility has evolved over the past few years, it can be a difficult nut to crack.

I started as many do, by throwing bodies at the problem. My organization hired many highly trained and expensive subject matter experts to test our large number of pages and apps. This approach was sustainable up to a point, until (you guessed it) the “agile revolution” hit us, and everyone was urgently moving from a waterfall to an agile development approach. Waterfall had always given us plenty of time between production pushes. Now, while not full-fledged agile, we moved to incremental updates, small focused teams, and much more frequent pushes to production.

As we progressed from 5 or 10 teams running agile to 20, then 30, and then more than 100, the old method of relying on subject matter expert assessments during the software development life cycle fell apart. We came to the sobering realization that even if we had the funding, it was not feasible to staff an accessibility expert team of 100+ people. Even simple logistical questions such as “where the heck will they all sit?” were impossible to solve.

Having worked in other non-functional spaces, including performance and security, I decided to learn from that experience (and those mistakes) and use the tried-and-true solution: software and automation! Luckily, around this time Deque and other companies were developing and marketing some outstanding automated accessibility products, and Deque even provided at least one of them as open source: the axe-core engine library.

Jumping headlong into software and automation, we were determined to shift as far left as possible in the software development life cycle, with concept and design as big targets. Automation came in early in the development process: our developers had access to axe and other paid automated tools that supported continuous build integration.

Not All Automated Tools Are Created Equal

Now, with the simple push of a button or a build integration, we were provided with truckloads of information on accessibility defects, with the axe rules engine sometimes covering up to 50% of the total defects we typically experienced. With developers using the browser extension and API integration, the goal was to address everything. When we ran an automated scan of our website, we expected it to return zero defects.

However, even as we were making this light-speed progress, we noticed that some entities (who will remain unnamed in this blog) were scanning our website and still reporting that we had issues. What was the delta? It turns out that not all automated scanning engines are created equal. Whether you are using an open source solution or purchasing a product from a vendor, the thought and diligence put into the rules engine matters. It matters a lot.

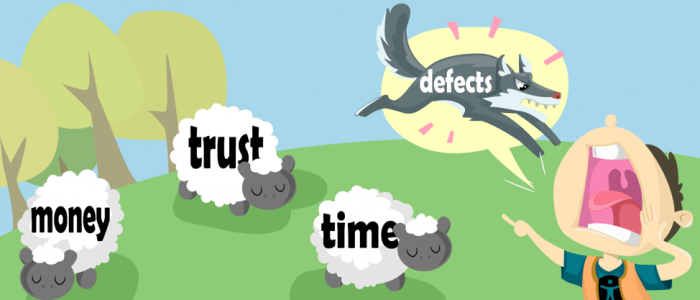

In the ecstasy of automated testing, you can get a lot of data on defects, and that data can quickly become overwhelming. What is required at this stage is an industry-leading rules engine that strives for zero false positives. We do not want anyone on our teams wasting time. In fact, if developers work on issues that they later have to “undo” because of false positive information, you will lose their trust. So be careful what you choose when turning to automation to scale your accessibility program.

Luckily for us, we already had the industry-leading axe-core engine in all of the products we used: zero false positives (bugs notwithstanding)! Those entities I spoke of earlier were clearly not using axe, and eventually we were able to determine what they were using and replicate their error-prone results. Had we used that engine, or any of the many others that lack “zero false positives” as a goal, we would have wasted valuable time, resources, and money.

Creation of the False Positive Calculator

To illustrate how costly false positives can be for your organization, I have provided a quick, easy-to-use web calculator for estimating the impact. It uses industry average times for each step: running an automated scan (30 seconds), discussing defects as a team (5 minutes), fixing defects (10 minutes), validating defects (5 minutes), and possibly reverting the fix (because it wasn’t really a defect; it was a false positive!). With it, you can show your senior leadership, in concrete dollars, why it matters to pick the best accessibility scanning engine possible.

Simply enter your company’s numbers into the calculator (blended hourly rate, number of team members in triage, average false positive rate, and number of pages in scope) to see the difference tailored to your organization.

Because some available products run false positive rates of 20% or more, that’s a good place to start your calculations. Play with the numbers and move them around: even if you push the rate down to 5%, the cost is still material, representing money, time, and resources that should be going to other valuable work.
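The exact formula behind the web calculator isn't given here, so the following is only a rough Python sketch of how an estimate like this could be computed. It rests on several labeled assumptions. `issues_per_page` is a hypothetical input that the calculator's listed fields do not include. The revert time of 10 minutes is made up, because no revert time is stated. Team discussion is charged once per triage member. The 30-second scan is left out, since the scan runs whether or not its results are false. All names in the code are illustrative.

```python
from dataclasses import dataclass

# Per-activity times in minutes, from the industry averages quoted above.
DISCUSS_MINUTES = 5.0   # team discussion of one reported defect
FIX_MINUTES = 10.0      # developer "fixes" the defect
VALIDATE_MINUTES = 5.0  # someone validates the fix
# ASSUMPTION: no revert time is given; we guess undoing a bogus fix takes as long as making it.
REVERT_MINUTES = 10.0
# Scan time (30 seconds) is deliberately excluded: the scan is run regardless of false positives.


@dataclass
class CalculatorInputs:
    blended_hourly_rate: float   # dollars per person-hour
    triage_team_size: int        # people who sit in on defect discussion
    false_positive_rate: float   # fraction of reported issues that are false, e.g. 0.20
    pages_in_scope: int
    issues_per_page: float       # HYPOTHETICAL input, not one of the calculator's fields


def wasted_minutes_per_false_positive(team_size: int) -> float:
    """Person-minutes spent on one false positive before it is recognized and reverted."""
    # Discussion consumes every triage member's time; fix, validation, and revert
    # are assumed to be done by one person each.
    return DISCUSS_MINUTES * team_size + FIX_MINUTES + VALIDATE_MINUTES + REVERT_MINUTES


def false_positive_cost(inputs: CalculatorInputs) -> float:
    """Estimated dollars spent handling false positives across all pages in scope."""
    reported_issues = inputs.pages_in_scope * inputs.issues_per_page
    false_positives = reported_issues * inputs.false_positive_rate
    wasted_hours = false_positives * wasted_minutes_per_false_positive(inputs.triage_team_size) / 60
    return wasted_hours * inputs.blended_hourly_rate


if __name__ == "__main__":
    # Compare a 20% false positive rate against 5%, holding everything else fixed.
    for rate in (0.20, 0.05):
        inputs = CalculatorInputs(
            blended_hourly_rate=100.0,
            triage_team_size=4,
            false_positive_rate=rate,
            pages_in_scope=500,
            issues_per_page=10.0,
        )
        print(f"{rate:.0%} false positives: ${false_positive_cost(inputs):,.0f}")
```

With these made-up inputs ($100/hour, a four-person triage team, 500 pages, 10 reported issues per page), the sketch prints $75,000 at a 20% false positive rate and $18,750 at 5%. Because the cost scales linearly with the false positive rate, cutting the rate by a factor of four cuts the waste by the same factor. Even the lower figure is far from zero.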

Deque is committed to continual improvement of axe-core and aligning it to the Web Content Accessibility Guidelines (WCAG), especially as WCAG evolves. Major IT organizations like Microsoft and Google have chosen axe-core as the best engine on the market today. Don’t let automation drive you into expensive and unnecessary work – pick the rules engine that does not lie – axe-core.

Really useful article Greg. I’ll certainly be using your “False Positive Calculator” with clients in future.