How do the new iOS 14 accessibility features stack up?

This past week, Apple released iOS 14, which includes new features such as Widgets, Back Tap, and the ability to use Voice Control and VoiceOver at the same time. I thought this would be the perfect time to bring our mobile team together to explore how these new iOS 14 features relate to accessibility.

Thanks to Jatin Vaishnav, Jennifer Dailey, Kate Owens and Robin Kanatzar from the Deque mobile team for all their help.

You can watch a video of our conversation here, or read a summary of our team’s raw reactions below. If you’d prefer to navigate the video by chapter, these timestamps jump to the relevant parts:

- 00:00 Intros

- 01:09 Reviewing home screen widgets

- 06:44 The team discusses the new Back Tap feature

- 12:42 What happens when VoiceOver and Voice Control run together?

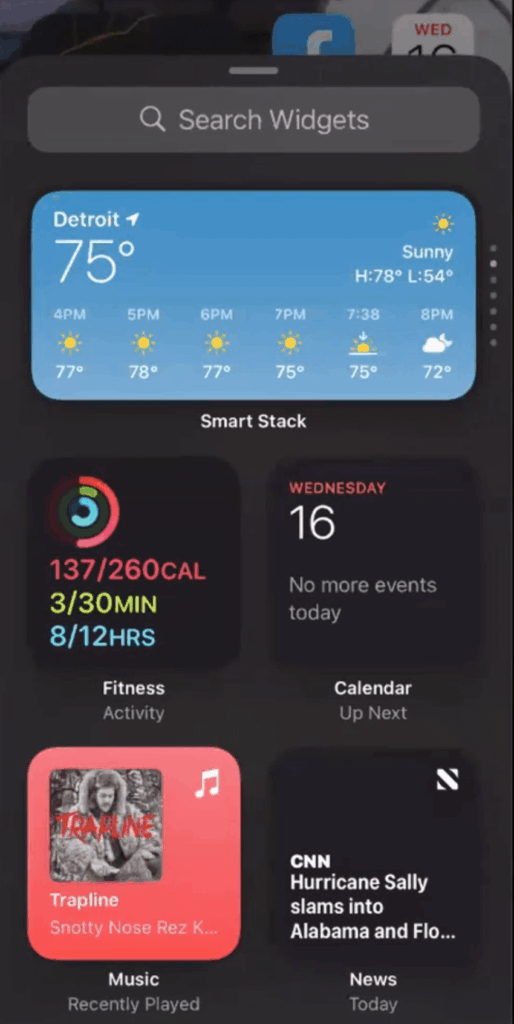

Widgets

Widgets make their debut on iOS with the release of iOS 14. You can now add and arrange widgets of various sizes on your home screen to get an easily accessible snapshot of an app’s data. For example, you can add a Weather app widget to the home screen that shows a summary of the temperature and weather conditions in your current city. Simply by glancing at it (or by focusing on it with an assistive technology), you learn that it is currently 75°F in Detroit, MI. In this way, widgets make it quicker and easier to get the information that matters to you from an application. While widgets are not explicitly an accessibility feature, we believe they benefit assistive technology users by letting them reach pieces of an application with less context switching than navigating inside the application itself requires.

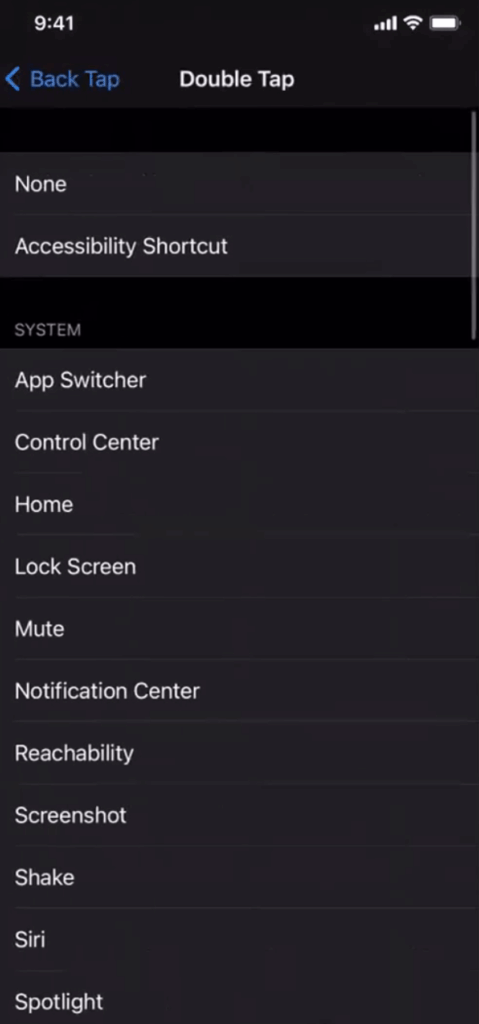

Back Tap

The new Back Tap feature in iOS 14 lets you set up actions that are triggered when you double-tap or triple-tap the back of your phone. For example, a double tap on the back of your phone can start VoiceOver, and a triple tap can open Control Center. Apple provides a long list of actions to choose from when you set up Back Tap in the Accessibility menu of the Settings app. At first glance, we see Back Tap as a convenient way to perform common actions with simple tapping gestures. More review is needed to confirm that these gestures do not conflict with other assistive technologies, but our initial reaction is that Back Tap is beneficial.

“As a screen reader user, if I want to take a screenshot, I can do it just by tapping the back of the phone and then sending it, for example, with voice recognition. It’s amazing to have such a quick, efficient way to do this.”

-Jatin

Voice Control WITH VoiceOver… Together!?

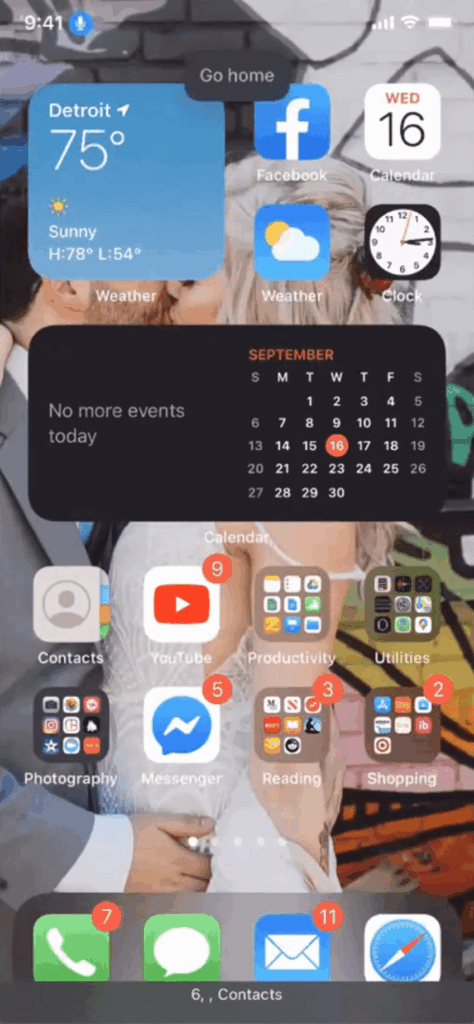

In iOS 14, Apple advertises the ability for two of its assistive technologies to work together: VoiceOver and Voice Control. In the accessibility world, it is unusual for two assistive technologies to run at the same time, so we were eager to test this combination on the home screen with widgets. Right away, we noticed how much information is presented. For example, VoiceOver reads through every item on the home screen along with the number Voice Control assigns to it: “1, Facebook, 2, Calendar, 3, Safari…”. With this much information being spoken (imagine a very full home screen), it’s difficult to find a moment to speak commands to Voice Control.

A few other things worried us about this combination. First, when VoiceOver announced widgets, some role information seemed to be missing. Without the role, a blind user may not know what kind of element has focus. Second, when we added a widget to the top-left corner of the screen, Voice Control did not assign it a number, so we could not access that widget by number. Voice Control’s numbering appears to begin after the first widget.

Moreover, this unusual combination of assistive technologies has left us wondering: who is the target user base? What types of users would benefit from this combination? As a team, we do not recommend formally supporting this feature in your applications until Apple works out these issues and the intended user base becomes clearer. Our team will keep a close eye on this feature and keep you updated.

“It is a lot of sensory information – I found that it can be hard to find a time to […] talk to Voice Control while using it with VoiceOver on.”

-Kate

Conclusion

Like any OS update, iOS 14 contains some features that are promising for assistive technology users and some that are concerning. Widgets seem beneficial because they provide easy access to the most pertinent pieces of an application. Similarly, Back Tap provides another way to create shortcuts, allowing assistive technology users to use their devices more efficiently. In contrast, the combination of VoiceOver and Voice Control is concerning. As a team, we are hesitant, and we recommend waiting until Apple has ironed out these issues before supporting this combination in your own applications.

I don’t agree with the statements about Voice Control and VoiceOver.

Why do you say that?

There are people who have both mobility and vision disabilities, and this feature will help them. It also helps us lazy blind people who want to control the phone by voice. Is it perfect? I would say not. But it is a good step forward. The first group is the one it truly benefits.